Biography

- Computer Graphics

- Artificial Intelligence

- Extended Reality

- Games

Featured Publications

This paper presents a CNN-based model to estimate complex lighting for mixed reality environments with no previous information about the scene. We model the environment illumination using spherical harmonics (SH) environment lighting, which can efficiently represent area lighting. We propose a new CNN architecture that takes an RGB image as input and recognizes the environment lighting in real time. Unlike previous CNN-based lighting estimation methods, we propose a highly optimized deep neural network architecture, with a reduced number of parameters, that can learn highly complex lighting scenarios from real-world high-dynamic-range (HDR) environment images.

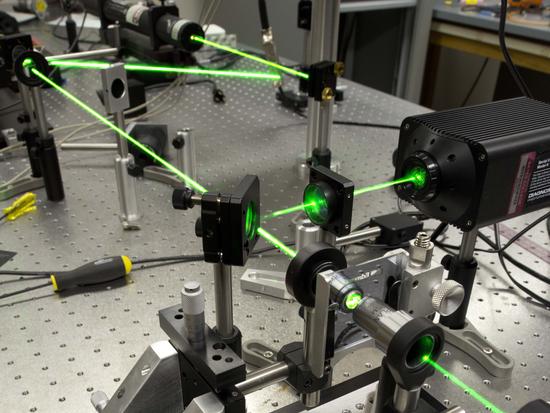

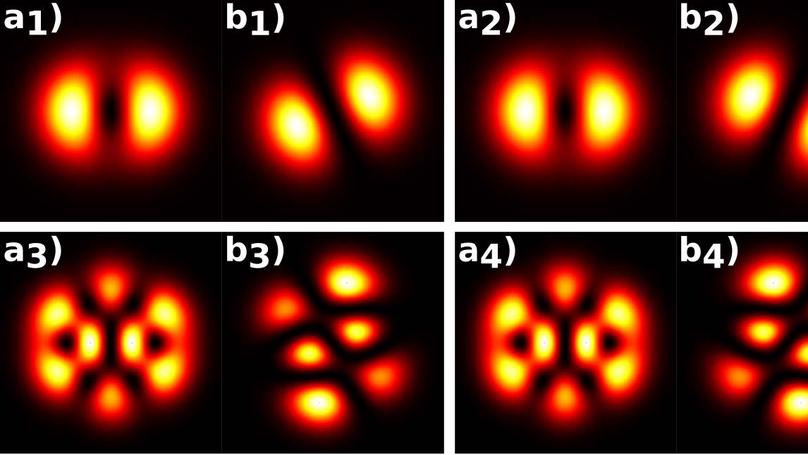

We develop a method to characterize arbitrary superpositions of light orbital angular momentum (OAM) with high fidelity by using astigmatic transformation and machine-learning processing. In order to identify each superposition unequivocally, we combine two intensity measurements. The first one is the direct image of the input beam, which is invariant for positive and negative OAM components. The second one is an image obtained using an astigmatic transformation, which allows distinguishing between positive and negative topological charges. Samples of these image pairs are used to train a convolutional neural network and achieve high-fidelity recognition of arbitrary OAM superpositions.

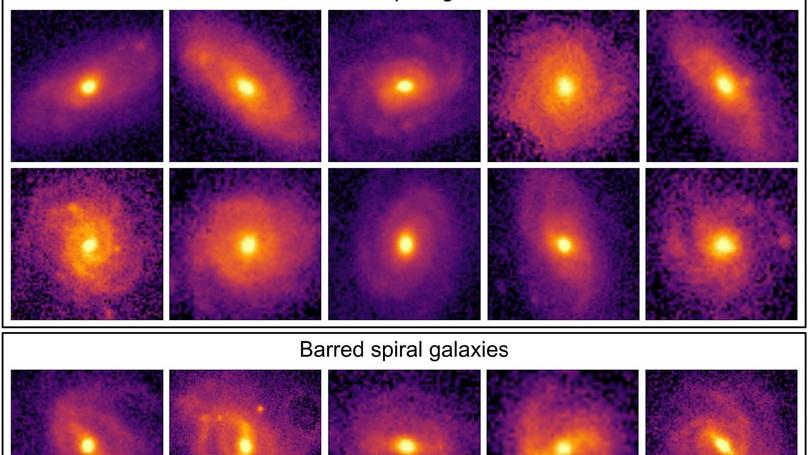

We investigate how to substantially improve galaxy classification within large datasets by mimicking human classification. We combine accurate visual classifications from the Galaxy Zoo project with machine and deep learning methodologies. We propose two distinct approaches for galaxy morphology: one based on non-parametric morphology and traditional machine learning algorithms, and another based on deep learning.

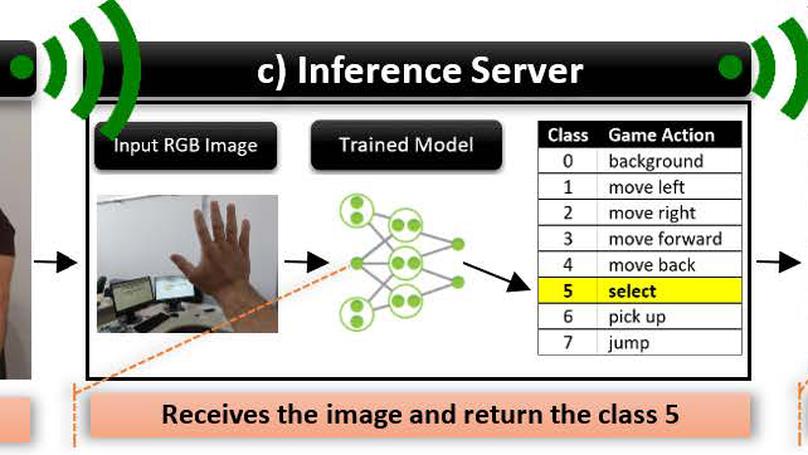

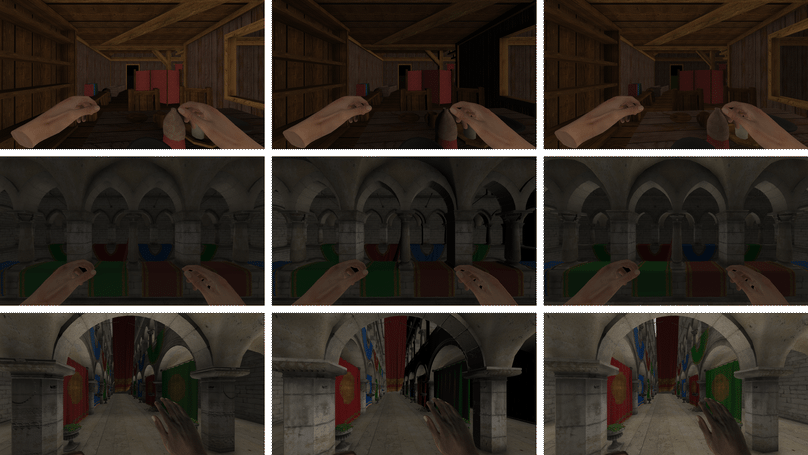

We used a DenseNet Convolutional Neural Network (CNN) architecture to perform the recognition in real time, in both indoor and outdoor environments, without requiring any image segmentation process. Our research also produced a vocabulary of natural and comfortable hand poses based on users’ preferences, and evaluated users’ satisfaction and performance in an entertainment setup. Our recognition model achieved an accuracy of 97.89%.

We present the Spherical Harmonics Light Probe Estimator, a deep-learning-based technique that estimates the lighting setting of the real-world environment. The method uses a single RGB image and does not require prior knowledge of the scene. The estimator outputs a light probe of the real-world lighting, represented by 9 spherical harmonics coefficients. The estimated light probe is used to create a composite image containing both real and virtual elements in an environment with consistent illumination.
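To make the 9-coefficient representation concrete, here is a minimal sketch of how such a light probe could be evaluated in a given direction. It uses the standard real spherical harmonics basis for bands 0 to 2. The coefficient ordering, the single-channel treatment, and the function names `sh_basis_9` and `eval_light_probe` are assumptions for illustration; the abstract does not state the estimator's actual conventions.

```python
import math


def sh_basis_9(x, y, z):
    """Real spherical harmonics basis (bands 0-2) at a unit direction.

    Assumed ordering of (l, m): (0,0), (1,-1), (1,0), (1,1),
    (2,-2), (2,-1), (2,0), (2,1), (2,2).
    """
    return [
        0.282095,
        0.488603 * y,
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,
        1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z,
        0.546274 * (x * x - y * y),
    ]


def eval_light_probe(coeffs, direction):
    """Reconstruct the lighting value for one color channel.

    coeffs: 9 SH coefficients (hypothetical estimator output).
    direction: any non-zero 3D vector; it is normalized here.
    """
    if len(coeffs) != 9:
        raise ValueError("expected 9 SH coefficients")
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    basis = sh_basis_9(x / norm, y / norm, z / norm)
    # The lighting is a weighted sum of the basis functions.
    return sum(c * b for c, b in zip(coeffs, basis))


# Illustrative values only: a constant ambient term plus light from +z.
example_coeffs = [1.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
top = eval_light_probe(example_coeffs, (0.0, 0.0, 1.0))
bottom = eval_light_probe(example_coeffs, (0.0, 0.0, -1.0))
```

For an RGB light probe, the same evaluation would be repeated with one set of 9 coefficients per color channel.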

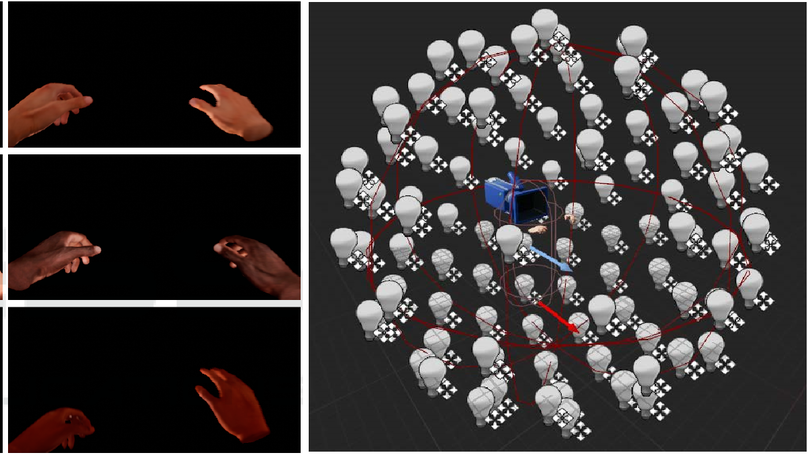

We present a deep-learning-based technique that estimates the position of a discrete point light source from a single color image. The estimated light source position is used to create a composite image containing both the real and virtual environments.

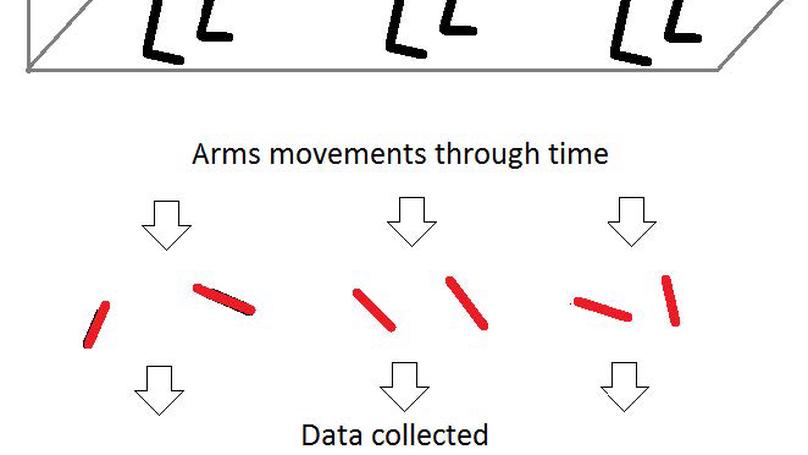

We developed a novel Recurrent Neural Network topology based on Long Short-Term Memory (LSTM) cells that is able to classify motion sequences of different lengths.
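As an illustration of why LSTM cells suit sequences of different lengths, the sketch below runs one standard LSTM cell over a sequence frame by frame and returns the final hidden state, whose size does not depend on the sequence length. This is a textbook LSTM cell with random, untrained weights, not the novel topology described above; `TinyLSTMCell` and `encode_sequence` are hypothetical names.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


class TinyLSTMCell:
    """A standard LSTM cell with random (untrained) weights."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        n = input_size + hidden_size
        # One weight matrix and bias per gate:
        # i = input, f = forget, g = candidate, o = output.
        self.w = {
            gate: [[rng.uniform(-0.5, 0.5) for _ in range(n)]
                   for _ in range(hidden_size)]
            for gate in "ifgo"
        }
        self.b = {gate: [0.0] * hidden_size for gate in "ifgo"}

    def _gate(self, gate, xh, activation):
        return [
            activation(sum(w * v for w, v in zip(row, xh)) + bias)
            for row, bias in zip(self.w[gate], self.b[gate])
        ]

    def step(self, x, h, c):
        """Advance one time step; returns the new (h, c) pair."""
        xh = list(x) + list(h)
        i = self._gate("i", xh, sigmoid)
        f = self._gate("f", xh, sigmoid)
        g = self._gate("g", xh, math.tanh)
        o = self._gate("o", xh, sigmoid)
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new


def encode_sequence(cell, frames):
    """Fold a sequence of any length into a fixed-size hidden state."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    for x in frames:
        h, c = cell.step(x, h, c)
    return h


cell = TinyLSTMCell(input_size=3, hidden_size=4)
short_motion = [[0.1, 0.2, 0.3]] * 5    # 5 frames
long_motion = [[0.1, 0.2, 0.3]] * 50    # 50 frames
short_code = encode_sequence(cell, short_motion)
long_code = encode_sequence(cell, long_motion)
```

Both `short_code` and `long_code` have 4 entries, so a classifier layer placed on top of the final hidden state could handle either sequence without padding or cropping.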

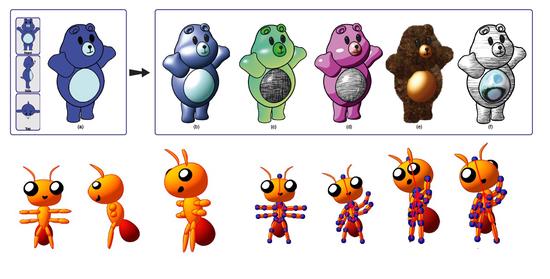

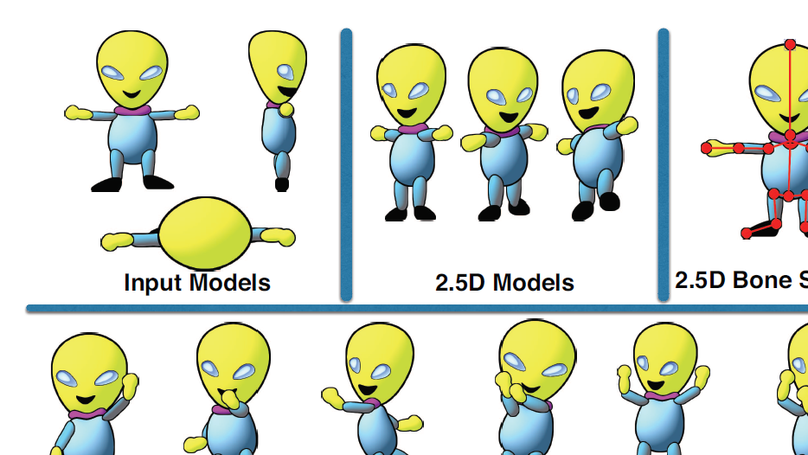

We present a novel approach that allows the user to easily produce both new views and new poses for 2.5D Models, thus puppeteering them. It makes use of a hierarchical bone structure that explores the methodology of the 2.5D Models, ensuring coherence between the bone structure and the model. The approach is intuitive for users acquainted with previous 2.5D Modeling tools.

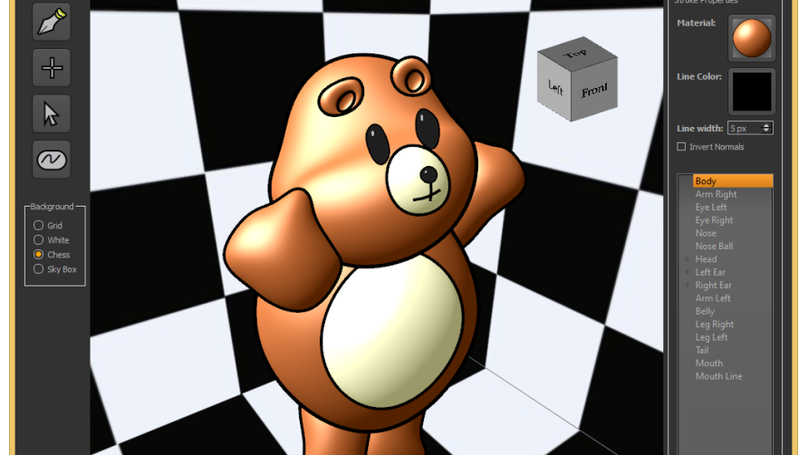

In this work, we tackle the problem of providing interactive 3D shading effects to 2.5D modeling. Our technique relies on the graphics pipeline to infer relief and to simulate the 3D rotation of the shading effects inside the 2D models in real time. We demonstrate the application on Phong, Gooch, and cel shading, as well as environment mapping, fur simulation, animated texture mapping, and (object-space and screen-space) texture hatching.
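For readers unfamiliar with two of the shading models named above, the following minimal sketch shows their usual textbook form for a single surface point: cel shading quantizes the diffuse term into a few flat bands, and Gooch shading blends between a cool and a warm color. It does not reproduce the 2.5D relief inference itself; the function names, band count, and colors are assumptions chosen for illustration, and the vectors are assumed to be unit length.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert(normal, light_dir):
    """Clamped diffuse term for unit vectors."""
    return max(0.0, dot(normal, light_dir))


def cel_shade(normal, light_dir, bands=3):
    """Quantize the diffuse term into `bands` flat levels in [0, 1].

    `bands` must be at least 2.
    """
    if bands < 2:
        raise ValueError("bands must be at least 2")
    d = lambert(normal, light_dir)
    level = min(int(d * bands), bands - 1)
    return level / (bands - 1)


def gooch_shade(normal, light_dir,
                cool=(0.0, 0.0, 0.6), warm=(0.6, 0.6, 0.0)):
    """Blend from a cool color (facing away) to a warm color (facing the light)."""
    t = (1.0 + dot(normal, light_dir)) / 2.0
    return tuple(t * w + (1.0 - t) * c for w, c in zip(warm, cool))


facing = cel_shade((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
away = cel_shade((0.0, 0.0, 1.0), (0.0, 0.0, -1.0))
warm_side = gooch_shade((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
cool_side = gooch_shade((0.0, 0.0, 1.0), (0.0, 0.0, -1.0))
```

In a real-time setting these formulas would normally run per fragment in a shader; the Python form is only meant to show the arithmetic.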