PEEK movement sparse data

Abstract

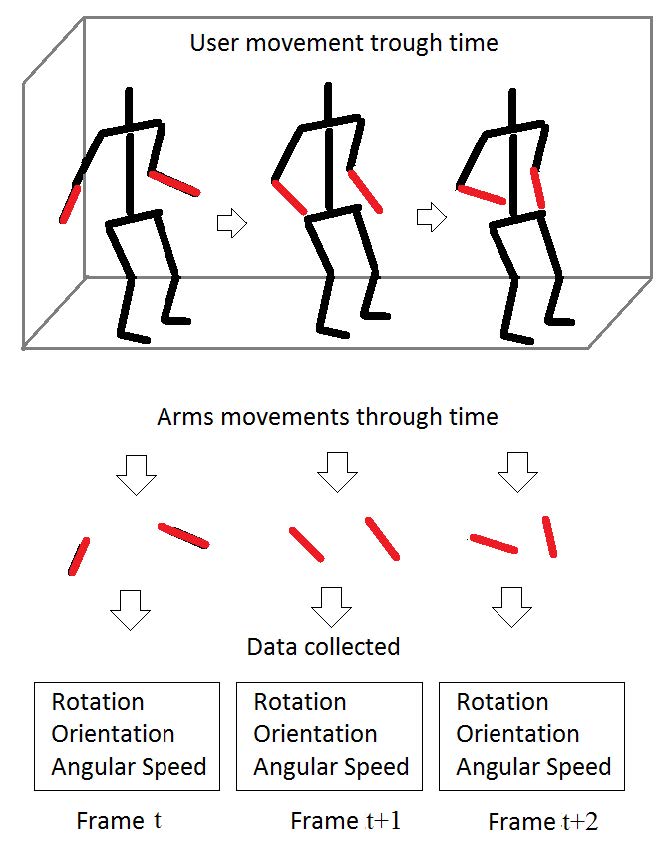

Games and other applications are exploring many modes of interaction to create intuitive interfaces, such as touch screens, motion controllers, and recognition of gestures or body movements. To support these, human motion is captured by a variety of sensors, including accelerometers, gyroscopes, heat sensors and cameras. However, the analysis of motion data captured by low-cost sensors remains underexplored. This article explores the extent to which full-body motion classification can be achieved by observing only sparse data captured by two separate wearable inertial measurement unit (IMU) sensors. To this end, we developed a novel Recurrent Neural Network topology based on Long Short-Term Memory (LSTM) cells that can classify motion sequences of different lengths. In cross-validation tests, our model achieves an overall accuracy of 96%, which is notable given that the raw data were obtained using only two simple and accessible IMU sensors capturing arm movements. We also built and made public a motion database of sparse data captured from 11 actors performing five different actions. For comparison with existing methods, other deep learning approaches for sequence evaluation (more specifically, approaches based on convolutional neural networks) were adapted to our problem and evaluated.
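The abstract does not specify the exact network topology. As a minimal sketch of the general idea only (an LSTM whose final hidden state feeds a softmax over the five actions, so that a sequence of any length maps to a single label), the pure-Python forward pass below assumes 12 input features per time step (a hypothetical layout of 3-axis accelerometer plus 3-axis gyroscope readings per IMU) and an arbitrary hidden size of 8. The weights are random and untrained, and the class and constant names are illustrative; this is not the authors' model.

```python
import math
import random

# Hypothetical input layout: each of the two IMUs reports 3-axis accelerometer
# and 3-axis gyroscope values, giving 12 features per time step (an assumption).
N_FEATURES = 12
N_HIDDEN = 8      # arbitrary hidden size chosen for illustration
N_CLASSES = 5     # five actions, as in the published database


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def init_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class LSTMClassifier:
    """Single-layer LSTM followed by a softmax layer (random, untrained weights)."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # One weight matrix and bias per gate, acting on [x_t, h_{t-1}].
        self.gates = {
            g: (init_matrix(N_HIDDEN, N_FEATURES + N_HIDDEN, rng), [0.0] * N_HIDDEN)
            for g in ("input", "forget", "output", "cell")
        }
        self.W_out = init_matrix(N_CLASSES, N_HIDDEN, rng)
        self.b_out = [0.0] * N_CLASSES

    def _gate(self, name, z, act):
        W, b = self.gates[name]
        return [act(a + bi) for a, bi in zip(matvec(W, z), b)]

    def forward(self, sequence):
        """Run the LSTM over a sequence of any length; return class probabilities."""
        h = [0.0] * N_HIDDEN
        c = [0.0] * N_HIDDEN
        for x_t in sequence:
            z = list(x_t) + h
            i = self._gate("input", z, sigmoid)
            f = self._gate("forget", z, sigmoid)
            o = self._gate("output", z, sigmoid)
            g = self._gate("cell", z, math.tanh)
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        # The final hidden state summarises the whole sequence, whatever its length.
        logits = [a + b for a, b in zip(matvec(self.W_out, h), self.b_out)]
        m = max(logits)  # subtract the max for a numerically stable softmax
        exps = [math.exp(v - m) for v in logits]
        total = sum(exps)
        return [e / total for e in exps]


# Usage: two synthetic sequences of different lengths go through the same model.
rng = random.Random(42)
model = LSTMClassifier()
short_seq = [[rng.gauss(0, 1) for _ in range(N_FEATURES)] for _ in range(20)]
long_seq = [[rng.gauss(0, 1) for _ in range(N_FEATURES)] for _ in range(75)]
for seq in (short_seq, long_seq):
    probs = model.forward(seq)
    print(len(seq), "steps -> predicted class", max(range(N_CLASSES), key=probs.__getitem__))
```

Because the recurrence simply runs for as many steps as the input has, no fixed window size is needed; batched implementations usually achieve the same effect with padding plus masking or packed sequences.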